In 2011, the film Moneyball brought data-driven decisions into the spotlight. Based on the 2003 book by Michael Lewis, it told the story of Billy Beane, the Oakland Athletics general manager. Beane assembled a competitive baseball team on a limited budget using sabermetrics, a data-driven method of evaluating players.

One key metric Beane used was on-base percentage (OBP). OBP measures a player’s ability to get on base, which is crucial for scoring runs and winning games. Focusing on overlooked players with high OBPs allowed Beane to build a successful team despite having less money than other teams to pay top stars.

Sabermetrics was pioneered by Bill James, who questioned traditional stats like batting average. James found stats like OBP better reflected a player’s value. By embracing James’ findings while other teams stuck with outdated methods, Beane gave Oakland a competitive edge.
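To make the difference between the two stats concrete, here is a minimal sketch that computes batting average and OBP using the standard formulas. The two players and their numbers are made up for illustration, and the function and field names are my own.

```python
def batting_average(hits, at_bats):
    """Hits divided by at-bats; walks are ignored entirely."""
    return hits / at_bats if at_bats else 0.0


def on_base_percentage(hits, walks, hit_by_pitch, at_bats, sacrifice_flies):
    """Standard OBP: times on base divided by the plate appearances that count."""
    denominator = at_bats + walks + hit_by_pitch + sacrifice_flies
    if denominator == 0:
        return 0.0
    return (hits + walks + hit_by_pitch) / denominator


# Hypothetical season lines, invented for this example.
free_swinger = {"hits": 150, "walks": 20, "hit_by_pitch": 2,
                "at_bats": 500, "sacrifice_flies": 3}
patient_hitter = {"hits": 130, "walks": 90, "hit_by_pitch": 5,
                  "at_bats": 500, "sacrifice_flies": 5}

for name, line in [("free swinger", free_swinger),
                   ("patient hitter", patient_hitter)]:
    avg = batting_average(line["hits"], line["at_bats"])
    obp = on_base_percentage(**line)
    print(f"{name}: AVG {avg:.3f}, OBP {obp:.3f}")
```

In this invented example the free swinger has the better batting average (.300 vs .260), but the patient hitter reaches base more often (.375 vs roughly .328) because batting average gives no credit for walks. That gap is the kind of overlooked value the article describes.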

I would love to use a Moneyball-like analytics tool. It would be incredibly useful to have data illuminate my team’s true standout performers. But quantifying individual productivity is tricky for companies like ours that do knowledge work.

Take software engineers as an example. Unlike baseball players or factory workers, their “stats” aren’t clear-cut. Lines of code written don’t reflect value added. Cranking out features fast can hurt quality and pile up tech debt. Still, leaders need to assess talent. That requires a nuanced approach, weighing intangibles like teamwork, vision, and mentoring.

While Moneyball’s formula doesn’t apply directly, the lesson remains relevant. Finding hidden value by questioning “the way it’s always been done” can give organizations an advantage. But metrics require thought. Data should inform decisions, not drive them. With a balanced approach, Moneyball for business can be a game-changer!

Why Metrics Fall Short for Knowledge Workers

Knowledge work has no clear unit of output because there’s rarely one person responsible for the end result.

How would you measure a tech recruiter’s output? Is it the number of people hired? But what if the hiring company interviews poorly or the offer isn’t good enough? We could measure the number of candidates a recruiter submits for managers to review. But the candidates might be of poor quality, so you have to define quality first. Since quality is subjective, managers decide whether candidates are good or bad, and those decisions are heavily influenced by human factors.

The same problem applies to measuring developer productivity: it’s not clear how to do it. But in business, if you can’t measure something, you can’t improve it, and the engineering process needs constant refinement. It’s a living organism. We constantly try to understand how to deliver more value to users faster. So, how do we make developers more productive and happy?

A lousy manager who has never worked as a software engineer would measure byproducts: lines of code, pull requests, release frequency, bug counts, and story points. These metrics don’t work. They’re easy to game. The same job can be done with a few lines of code or hundreds. One can create a pull request for every minor detail. The bug count can be zero while the solutions are short-term fixes. Story points are made up, and engineers can mark every task as complex.
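Here is a small sketch of why lines of code is such a weak signal. Both snippets below are hypothetical and written for this example; they do the same job, yet one scores several times higher on a naive line count.

```python
# Two hypothetical implementations of the same task: sum the even numbers.
CONCISE = '''
def total_even(numbers):
    return sum(n for n in numbers if n % 2 == 0)
'''

PADDED = '''
def total_even(numbers):
    result = 0
    index = 0
    while index < len(numbers):
        value = numbers[index]
        remainder = value % 2
        if remainder == 0:
            result = result + value
        index = index + 1
    return result
'''


def count_loc(source):
    """Naive 'productivity' metric: number of non-blank lines."""
    return sum(1 for line in source.splitlines() if line.strip())


def load(source):
    """Execute the snippet and return the function it defines."""
    namespace = {}
    exec(source, namespace)
    return namespace["total_even"]


data = [1, 2, 3, 4, 5, 6]
concise_result = load(CONCISE)(data)
padded_result = load(PADDED)(data)

print("same answer:", concise_result == padded_result)
print("lines of code:", count_loc(CONCISE), "vs", count_loc(PADDED))
```

A dashboard that counts lines would rank the padded version well ahead, even though the two deliver identical value and the shorter one is easier to maintain.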

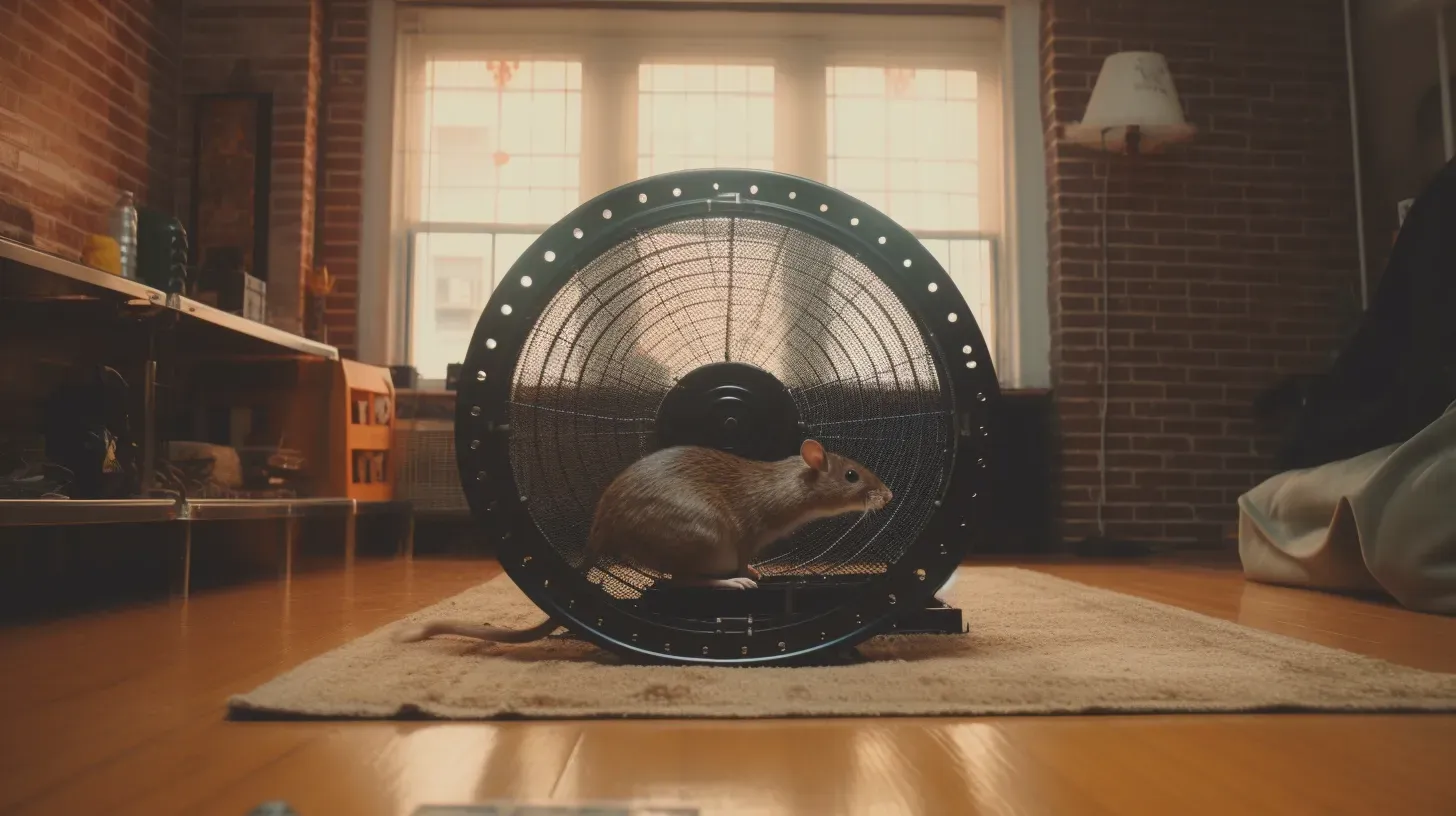

It’s like the Great Hanoi Rat Massacre of 1902. The French colonial government offered a bounty for rat tails, hoping to reduce the rat population. But people started cutting off the tails and releasing the rats so they could breed and produce more tails to cash in. Some even bred rats or smuggled in foreign rats to maximize bounty profits.

The Need for Qualitative Evaluation

While quantitative metrics provide helpful data, they fail to fully capture the nuance of creative work. Quality of work, collaboration, and innovative problem-solving all defy pure numerical tracking. Over-reliance on quantitative metrics gives a narrow view that lacks critical context.

So, while data has a role, it must be combined with qualitative reviews by managers familiar with the team. We over-engineer for replaceability: we want generic metrics that any manager can apply to all employees. But this pursuit of standardized data is insufficient and misleading.

It’s key to separate individual performance from overall process improvements.

For the latter, review systems holistically. Define and track KPIs and OKRs. The goal is to improve the process as a whole.

For individuals, assess subjective productivity qualitatively. Methods like peer reviews, self-evaluations, and manager observations capture the nuanced nature of creative work. While imperfect, qualitative evaluation provides the irreplaceable context needed to evaluate individuals accurately.

One good example of the right kind of metric is 360-degree feedback. This qualitative approach uses feedback from colleagues to gauge an employee’s performance. By implementing 360-degree feedback, we can identify areas for improvement and provide targeted support to employees, leading to increased productivity and better teamwork.

Barclays Bank is an example of a company that has seen great results with 360-degree feedback. The bank has used the system to manage multiple feedback channels in its human resources work, including as part of the employee selection function.

The 360-degree feedback appraisal at Barclays Bank has been reported to improve employee productivity by almost 45%. By using this multi-source assessment tool, the bank has been able to build trust in the review and win employees’ full support for it, ultimately leading to better performance and productivity.

Ultimately, Moneyball provides an inspirational lesson in thinking differently, but its formula doesn’t directly apply to knowledge work industries. Still, with care and balance, data-driven decision-making can improve organizations. Should we abandon metrics for creative fields entirely? Does qualitative evaluation alone capture the complete picture? Likely not. But neither does a purely numbers-driven approach.

So where does that leave leaders seeking to evaluate productivity and boost performance? How can we leverage data while honoring the human aspects of collaboration and innovation? One key is to separate process improvements from individual assessments. Standardized metrics can enhance systems holistically, while qualitative reviews better capture nuanced contributions.

Another question to ask ourselves: are we over-engineering for replaceability? Sometimes, the pursuit of simple, generic metrics misses the mark. While imperfect, a multifaceted approach that embraces both data and human judgment moves us in the right direction. With care, we can employ the best of both worlds, using metrics to inform work, not define it.

Originally published on Medium.com